This construction and engineering firm is set to become a key player as hyperscalers look to get the most out of their data centers

The AI revolution is boosting demand for the construction of power-intensive data centers, necessitating massive capital investment, which is expected to balloon to nearly $7 trillion by 2030.

As AI models become more advanced, they are becoming more energy-intensive, forcing companies to seek more efficient systems to operate their technologies. In addition to creating more efficient chips, hyperscalers are also recognizing the need to make their data centers more efficient, from cooling to energy transfer.

In the midst of this race towards power generation and efficiency gains, engineering and construction firm EMCOR Group (EME) is uniquely positioned to capitalize, as it has established itself as a key resource for hyperscalers looking to get the most out of their data centers.

As investment into data centers continues to rise, this company has established itself as an early beneficiary of these tailwinds.

Investor Essentials Daily:

Friday News-based Update

Powered by Valens Research

When the words artificial intelligence (“AI”) come to mind, what’s often thought of are the algorithms, data, software, and models that enable AI tools like ChatGPT, Gemini, Perplexity, and Claude to work and generate outputs based on user prompts.

However, what’s often left out in this narrative is the vast physical infrastructure that serves as the backbone of today’s AI boom: data centers. These sprawling facilities house thousands of servers that store data and perform vast amounts of complex computations needed to make AI tools run.

More importantly, these data centers need vast amounts of power to sustain not only system operations but also their accompanying cooling systems.

Take xAI’s Colossus supercomputer, for example. This massive, 785,000-square-foot facility is used to develop and maintain current and future iterations of xAI’s Grok AI model, and it consumes enough energy to power more than 150,000 homes.

Demand for AI and cloud computing is driving hyperscalers such as Microsoft (MSFT), Meta Platforms (META), Alphabet (GOOG), and Amazon (AMZN) to construct power-hungry data centers to power their advanced AI models.

By 2030, capital expenditure on data center infrastructure is expected to balloon to almost $7 trillion.

These sprawling facilities will either draw energy from the power grid or generate power on-site, necessitating massive capital investment in energy sources such as gas, renewable energy, or emerging technologies like small modular nuclear reactors.

In 2023, data centers accounted for 4% of electricity consumption in the U.S. By 2030, this number is expected to rise to 10%. As data centers account for a growing share of overall energy demand, hyperscalers are searching for ways to make their energy usage more efficient.
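To put the 4% and 10% figures in perspective, the short sketch below works out the constant annual growth rate they imply. It assumes the share grows at a steady compound rate between 2023 and 2030, which is a simplification for illustration and not a forecast from the source; the function name is hypothetical. It also measures growth in data centers' share of U.S. electricity consumption, not growth in the absolute amount of power they use.

```python
def implied_annual_growth(start_share: float, end_share: float, years: int) -> float:
    """Constant yearly growth rate that takes start_share to end_share.

    Assumes smooth compounding, which is an illustrative simplification.
    """
    return (end_share / start_share) ** (1 / years) - 1


# Figures from the text: 4% of U.S. electricity in 2023, 10% expected by 2030.
rate = implied_annual_growth(0.04, 0.10, 2030 - 2023)
print(f"Implied annual growth in share: {rate:.1%}")
```

Under that steady-growth assumption, data centers' share of consumption would need to rise by roughly 14% each year to get from 4% to 10% in seven years.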

Data center efficiency is becoming a greater priority among tech and AI firms as they work with limited energy sources in today’s AI race. After all, even 1% energy loss from these facilities can translate into $460 million in costs each year.

Companies are trying to combat the rising power costs of their advanced AI models by improving the efficiency of the chips that power them. However, these efficiency gains can only limit energy demand so much.

Servers that power large generative AI models that produce text and images constantly perform trillions of calculations. With thousands of servers housed in a single data center, those calculations generate tremendous amounts of heat. At any given moment, large hyperscale data centers produce enough heat to warm around 35,000 homes through the winter months.

This is why around 30% to 50% of all electricity consumed by these facilities is put toward cooling alone. Traditionally, data centers relied on air cooling, but as chips have become more advanced and produced more heat, companies have sought different solutions.

Amazon, for example, is now using its own liquid cooling systems to mitigate the heat generated by advanced chips used for complex calculations.

In the midst of the race towards efficiency, EMCOR Group (EME) is positioned to become a behind-the-scenes enabler of data center efficiency and infrastructure build-out.

This construction and engineering firm offers a comprehensive suite of services that includes end-to-end electrical construction for data centers. It can also plan, design, install, maintain, and retrofit sophisticated data center infrastructure and power generation systems.

Its power distribution and fiber optics systems help keep data centers operational and ensure data moves at proper rates. Meanwhile, its cooling and fire protection systems prevent data centers from overheating and detect and extinguish fires throughout the facilities.

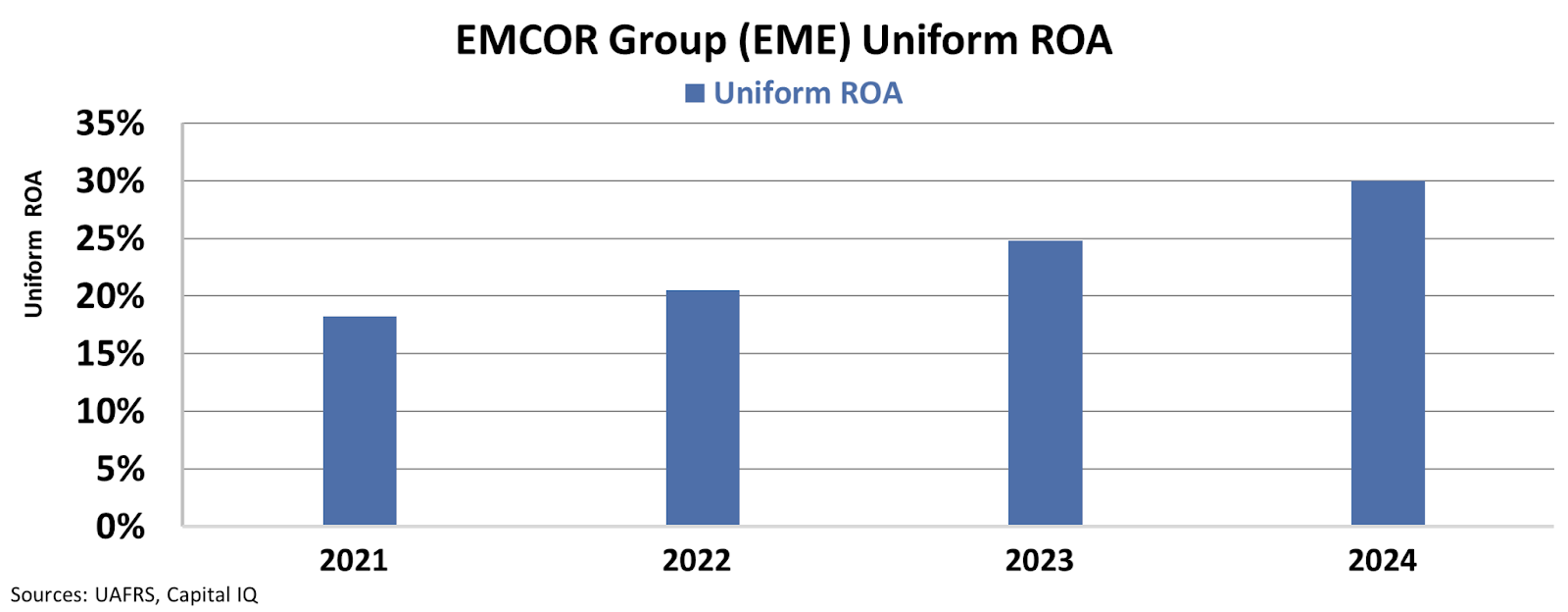

EMCOR is becoming increasingly important to the AI revolution and the need for efficient data centers, and its recent profitability trend reflects this.

EMCOR’s Uniform return on assets (“ROA”) has climbed from 21% in 2022 to 30% in 2024.
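For readers who want to size that move, the sketch below separates the change in percentage points from the relative improvement. It uses only the two ROA figures quoted above; the function name is hypothetical and the calculation is simple arithmetic, not part of the Uniform Accounting methodology.

```python
def roa_change(start: float, end: float) -> tuple[float, float]:
    """Return (change in percentage points, relative change) between two ROA values."""
    points = (end - start) * 100
    relative = (end - start) / start
    return points, relative


# Figures from the text: Uniform ROA of 21% in 2022 and 30% in 2024.
points, relative = roa_change(0.21, 0.30)
print(f"Change: {points:.0f} percentage points ({relative:.0%} relative increase)")
```

In other words, a rise from 21% to 30% is a gain of 9 percentage points, or an improvement of a little over 40% relative to the 2022 level.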

As investment in hyperscale data centers and efficiency continues to grow, EMCOR stands to benefit, as its business is uniquely positioned to meet both demands.

As the AI race continues to intensify, the company’s returns could continue their ascent, resulting in upside for investors.

Best regards,

Joel Litman & Rob Spivey

Chief Investment Officer &

Director of Research

at Valens Research